Google has quietly started phasing out the &num=100 search parameter, a small technical tweak that could have a big impact on how SEOs track rankings.

For years, appending &num=100 to a Google search URL has forced the SERP to display up to 100 organic results on a single page instead of the default 10. It has been a hidden but powerful feature, especially for rank tracking tools and SEOs doing manual checks.

But over the last week, many SEOs have noticed that &num=100 is no longer working reliably. Sometimes it returns 100 results. Other times, Google simply ignores it and shows the standard 10.

It might seem minor, but it is a big deal for the way our industry gathers data.

How &num=100 Has Been Used by Rank Trackers

Tools like SEMrush, Ahrefs, Moz and countless custom-built scrapers often rely on Google’s HTML results to monitor keyword positions.

When you can load 100 results on one page, you can grab all positions 1 to 100 with a single request. That makes it faster, cheaper and more reliable to track large keyword sets at scale.

It also lets SEOs see the long tail of results beyond page one, identifying pages bubbling up between positions 40 and 90 that could be pushed higher with optimisation.

When you remove that capability, everything slows down. Rank trackers must now fetch 10 pages to see the same amount of data, increasing costs and the risk of blocks or rate limits.
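To make the difference in request volume concrete, here is a minimal Python sketch that only builds the URLs involved and does not fetch anything. It assumes Google's `start` offset parameter is used to page through results ten at a time; the function names and the `BASE_URL` constant are illustrative and not taken from any specific rank tracker.

```python
from urllib.parse import urlencode

# Illustrative constant; real tools may use regional domains or extra parameters.
BASE_URL = "https://www.google.com/search"


def single_request_url(query: str) -> str:
    """Build one URL that asks for up to 100 results via num=100."""
    return f"{BASE_URL}?{urlencode({'q': query, 'num': 100})}"


def paginated_urls(query: str, depth: int = 100, page_size: int = 10) -> list[str]:
    """Build the URLs needed to cover `depth` positions, `page_size` results at a time."""
    return [
        f"{BASE_URL}?{urlencode({'q': query, 'start': offset})}"
        for offset in range(0, depth, page_size)
    ]


if __name__ == "__main__":
    print(single_request_url("red shoes"))
    print(len(paginated_urls("red shoes")))  # 10 URLs for the same 100 positions
```

Under these assumptions, a set of 1,000 keywords goes from roughly 1,000 requests per check to roughly 10,000, which is where the extra cost and block risk come from.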

What Is Changing

Google has not officially announced this change, but the parameter appears to be in the middle of a removal test. According to Barry Schwartz at Search Engine Roundtable, the &num=100 parameter currently only works “about 50% of the time,” with behaviour differing across accounts, sessions and regions.

Even with &num=100 manually appended, Google often defaults back to 10 results per page. The change seems to be rolling out gradually and inconsistently, which is typical of Google tests.

While there is no clear reason yet, it could be part of a broader move to:

- Reduce scraping load on their infrastructure
- Encourage more clicks and ad exposure per SERP

Either way, the technical door we have used for decades is closing.

In addition, multiple rank tracking tools are adjusting pricing models and limits: positions 1-20 will generally be included in standard plans; positions beyond 20 are being treated as premium data. This reflects the increased cost and load needed to fetch and report on deeper result sets.

Why This Matters for SEOs

If this sticks, it changes how rank tracking works at its core.

The removal of &num=100 is not the only factor. Some SEOs have also reported an increase in CAPTCHAs and anti-bot blocks when trying to collect more than 10 results at once. This could further complicate automated rank tracking as tools attempt to pull more pages to reach positions beyond the top 10.

Tools will not stop working, but they will lose efficiency and possibly accuracy. Getting visibility on positions beyond the top 10 will require more scraping requests and more time. This could:

- Make rank tracking data lag behind reality
- Increase tool costs (which may get passed on to users)
- Reduce visibility on emerging pages that have not cracked the top 10 yet

In short, tracking broad keyword sets just became more difficult.

For agencies and in-house teams relying on rank trackers to monitor thousands of keywords daily, this could become a real operational headache.

That said, many SEOs may welcome a shift in focus towards the top 20: tracking everything beyond position 20 often yields noise and takes more effort than it returns in impact. If SEOs only need to worry about whether terms are in (or moving toward) the top 20, then clarity and cost control improve.

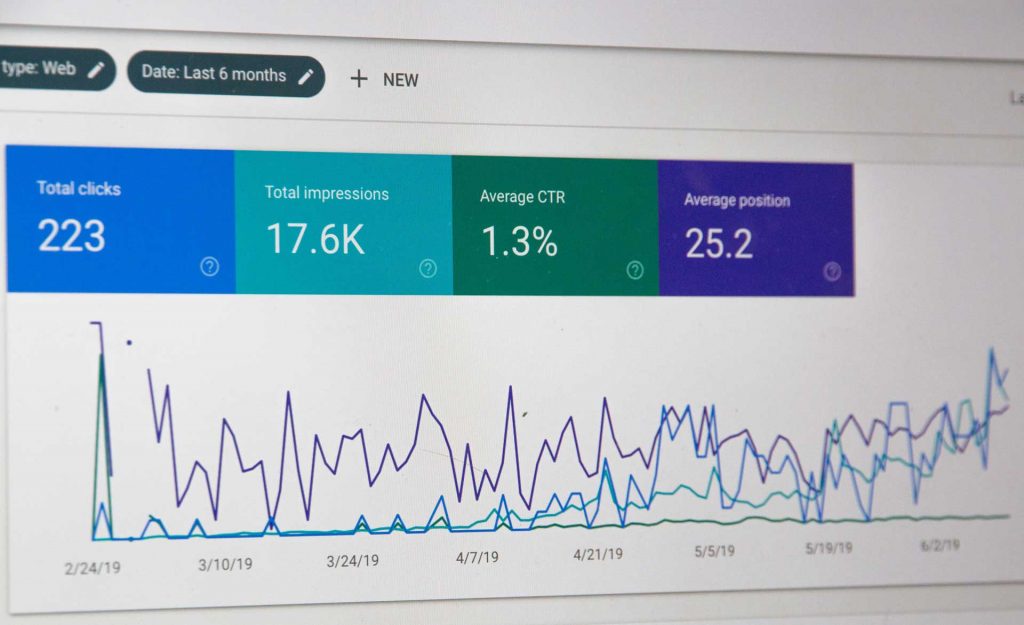

How This Has Impacted GSC Reporting

According to Brodie Clark, many sites have observed sharp drops in impressions in Google Search Console since &num=100 began failing. Keywords that were previously counted, often those ranking on page 2-3 or beyond, are no longer visible in reports. Average position has also improved for many sites, not necessarily because their rankings improved, but because results in positions 21-100 are no longer being loaded on a single page and so no longer register impressions.
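The arithmetic behind that apparent improvement can be sketched in a few lines of Python. This is a simplified illustration: it assumes average position behaves like an impression-weighted average, that impressions beyond position 20 disappear entirely, and it uses made-up numbers, not real Search Console data.

```python
def average_position(rows: list[tuple[int, int]]) -> float:
    """Impression-weighted average position over (position, impressions) rows."""
    total_impressions = sum(impressions for _, impressions in rows)
    weighted_sum = sum(position * impressions for position, impressions in rows)
    return weighted_sum / total_impressions


# Hypothetical (position, impressions) rows for one site.
before = [(3, 1000), (9, 500), (35, 400), (70, 100)]

# Assumption: impressions for positions beyond 20 are no longer recorded.
after = [(position, impressions) for position, impressions in before if position <= 20]

print(average_position(before))  # 14.25
print(average_position(after))   # 5.0
```

No ranking moved in this example, yet the reported average position improves from 14.25 to 5.0 while total impressions fall from 2,000 to 1,500.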

Search Engine Land data backs this: across hundreds of sites, most are seeing drops in both impressions and number of unique ranking terms. Historic comparisons (month-on-month or year-on-year) must now account for this shift, otherwise results may look misleadingly worse or better.

Our Take

At RedCore Digital, we see this as another sign of the direction Google is heading: less transparency and less data access.

While this change does not kill rank tracking, it chips away at one of the most efficient methods we have had for gathering organic position data.

Unless Google rolls it back, rank trackers will need to adapt. They will likely do this by finding new ways to aggregate position data from multiple queries, or by relying more heavily on clickstream panels, browser extensions and other non-Google data sources.

For SEOs, it is a reminder that our data is always borrowed and never guaranteed. The goalposts can and do move overnight.

What You Should Do

- Check your tools: Test whether your rank tracking tool is still pulling 100 results
- Refocus on priority keywords: With less accurate long-tail data, prioritise the keywords that actually drive revenue
- Stay updated: This might still be a test, so watch for an official statement or further rollout